1st Rule of Machine Learning

...

Machine learning (ML) is a powerful tool, but it's not always the first solution we should turn to…

Rule 1 of Machine Learning:

“Don’t use Machine Learning”

No Machine Learning, you say?

Diving straight into ML can be tempting, especially with the buzz it has generated. However, ML requires a robust data pipeline, high-quality labels, and most importantly, a clear understanding of the problem.

Anyone with experience at a large organisation will tell you how tough it is to get large volumes of high-quality, usable data. Machine learning thrives on data. While it's possible to repurpose data from one problem for another, simple heuristics can often achieve half the results of a full-blown ML model in a fraction of the time. In fact, the first iteration of a solution often doesn't involve ML at all.

If you find that hard to take from me, here is what some of the industry's pioneers have to say:

Google's 43 Rules of Machine Learning echo this sentiment in their first rule:

Machine learning is cool, but it requires data. Theoretically, you can take data from a different problem and then tweak the model for a new product, but this will likely underperform basic heuristics. If you think that machine learning will give you a 100% boost, then a heuristic will get you 50% of the way there.

Yann LeCun, Chief AI Scientist at Facebook.

"The complexity of your algorithm should not exceed the complexity of your data. If your data is telling a simple story, listen to it." - Yann LeCun, Chief AI Scientist at Facebook.

It is quite an intuitive idea: if the data suggests simplicity, we shouldn't complicate things with needlessly sophisticated algorithms. Sometimes the most straightforward approach leads to the most transparent, understandable and actionable insights.

Greg Corrado, Senior Research Scientist at Google.

"With big data, you can see patterns and trends that lead to insights and improvements in business processes, but it's not necessary to start complex. Simple algorithms can give you just as valuable insights." - Greg Corrado, Senior Research Scientist at Google.

This underscores the value of simplicity, especially in the initial stages of data analysis. Big data doesn't always call for big algorithms; often, the initial golden nuggets of insight come from the simplest statistical analyses.

Amit Ray, author of Compassionate Artificial Intelligence.

"Emotions are essential parts of human intelligence. Without emotional intelligence, artificial intelligence will remain incomplete." - Amit Ray, Compassionate Artificial Intelligence

The nuances of human emotion and intelligence are not always quantifiable, and sometimes a human touch — a simple, empathetic approach — can provide what complex algorithms cannot.

Peter Norvig, Director of Research at Google Inc.

"Simple models and a lot of data trump more elaborate models based on less data." - Peter Norvig, Director of Research at Google Inc.

Norvig's pragmatic approach highlights the practical side of machine learning, and it is a philosophy that I believe has worked well in large corporations too. It's not about the complexity of the model but about the quality and quantity of the data that feeds into it. A simple model with a wealth of data can often provide more accurate and reliable insights than a complex model starved of data.

Geoffrey Hinton, Professor at the University of Toronto.

"Remember that a model is a simplified representation of reality, and the goal is not to make it complex but to make it as simple as possible while still capturing the essence of the problem." - Geoffrey Hinton, Professor at the University of Toronto.

Hinton brings us back to the essence of machine learning, and of modelling in general: representing reality. The goal is to capture the core of the problem as clearly as possible, not to lose ourselves in the intricacies of complex modelling.

Where do you start, then?

Heuristics are a good first step, but we’ll come to those later; let’s start with the data. Whether you’re using simple rules or deep learning, it helps to have a decent understanding of the data. Grab a sample, run some statistics and visualise it! Knowing your data is hugely underrated in data science and machine learning: understanding the data and gaining domain knowledge is truly half the battle.
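As a minimal sketch of that first look, the snippet below profiles a reproducible random sample of one numeric column using only Python's standard library. The helper name `quick_profile`, the sample size and the use of `None` to mark missing values are illustrative assumptions; in practice you would likely reach for pandas and a plotting library.

```python
import random
import statistics

def quick_profile(values, sample_size, seed=0):
    """Summarise a reproducible random sample of a numeric column.

    Hypothetical helper: missing values are assumed to be encoded as None.
    """
    rng = random.Random(seed)  # fixed seed so the same sample is drawn every run
    sample = rng.sample(values, min(sample_size, len(values)))
    present = [v for v in sample if v is not None]
    return {
        "sampled": len(sample),
        "missing": len(sample) - len(present),
        "mean": statistics.fmean(present),
        "median": statistics.median(present),
        "stdev": statistics.stdev(present),  # needs at least two non-missing values
        "min": min(present),
        "max": max(present),
    }

# Illustrative column: 1,000 readings plus a handful of missing entries
column = list(range(1000)) + [None] * 10
print(quick_profile(column, sample_size=200))
```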

"When you have a problem, build two solutions - a deep Bayesian transformer running on multicloud Kubernetes and a SQL query built on a stack of egregiously oversimplifying assumptions. Put one on your resume, the other in production. Everyone goes home happy." - Brandon Rohrer

Apply traditional statistical methods directly.

- Correlation

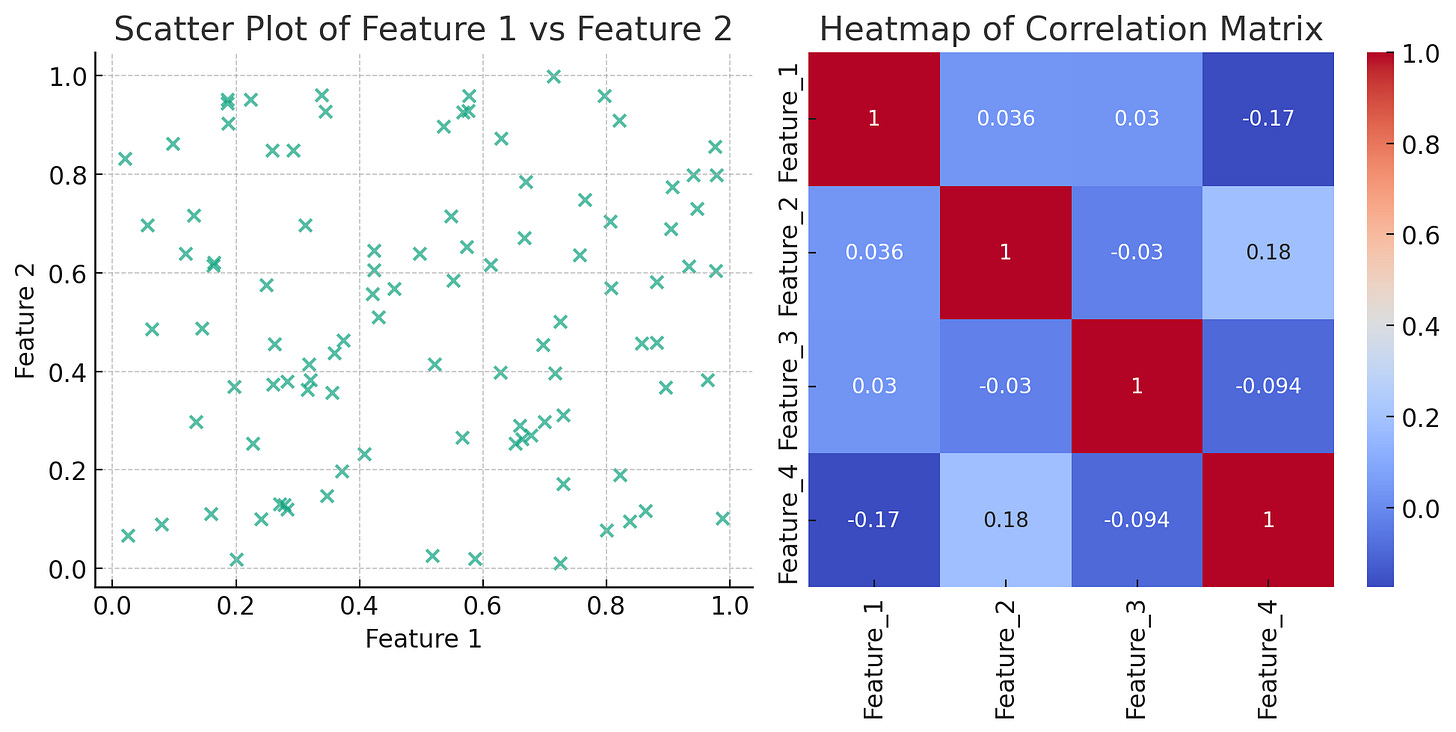

Simple correlations help reveal the relationship between each feature and the target variable. We can then pick the subset of features with the strongest relationships to visualise. Not only does this help us understand the data and the problem, so that we can apply machine learning more effectively, but we also gain better context on the business domain. Sometimes the interaction between features can also have a significant impact on the target variable; techniques like pair plots or correlation-matrix heatmaps help visualise the relationships between multiple features at once.
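A minimal sketch of that first pass, assuming a small hypothetical dataset held as plain Python lists: compute the Pearson correlation of every feature with the target, then rank features by the strength (absolute value) of that correlation. The feature names and values are invented for illustration; with pandas, `DataFrame.corr()` gives the full matrix you would feed into a heatmap.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sums of cross-products and squares; the 1/n factors cancel in the ratio
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)

def rank_features(features, target):
    """Return (name, r) pairs sorted by |r|, strongest relationship first."""
    scores = {name: pearson(values, target) for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

# Hypothetical weekly data: three candidate features and a sales target
target = [3, 5, 7, 9, 11]
features = {
    "weekday_flag": [2, 1, 2, 1, 2],
    "ad_spend": [1, 2, 3, 4, 6],
    "returns": [5, 4, 3, 2, 1],
}
for name, r in rank_features(features, target):
    print(f"{name:>12}: r = {r:+.2f}")
```

Ranking by absolute value keeps strong negative relationships (like `returns` here) near the top, since they are just as informative as positive ones.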

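It's crucial to remember that correlations and aggregate statistics can be misleading. The standard (Pearson) correlation coefficient only measures linear association, so variables with a strong relationship can show weak or even zero correlation when that relationship is non-linear or distorted by outliers. Rank-based measures such as Spearman's rank correlation or Kendall's tau mitigate this for monotonic relationships and are less sensitive to outliers, but a non-monotonic pattern (a U-shape, say) can still score close to zero, which is why plotting the data remains essential.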

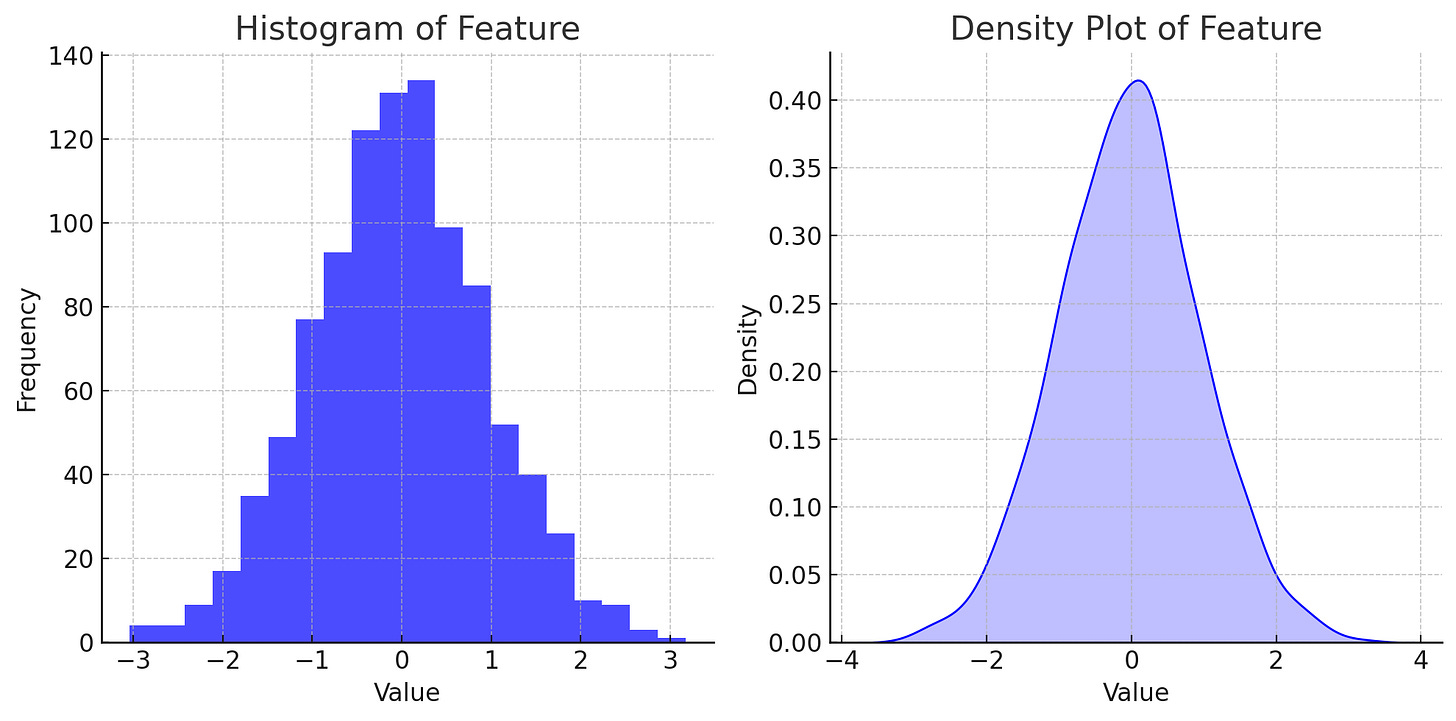
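To see that caveat in action, here is a small sketch in plain Python (no statistics library assumed) that computes Pearson's r and Spearman's rank correlation, using average ranks for ties. A perfectly monotonic but curved relationship (y = x^3) gets a Pearson r of about 0.92 but a Spearman coefficient of exactly 1, while a U-shaped relationship (y = x^2) scores zero on both, even though y is completely determined by x.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation: measures linear association only."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks

def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

xs = list(range(-5, 6))
cubic = [x ** 3 for x in xs]    # monotonic but non-linear
u_shape = [x ** 2 for x in xs]  # non-monotonic
print(f"cubic:   pearson={pearson(xs, cubic):.2f}  spearman={spearman(xs, cubic):.2f}")
print(f"u-shape: pearson={pearson(xs, u_shape):.2f}  spearman={spearman(xs, u_shape):.2f}")
```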

- Data Distribution

Visualising your data with histograms or density plots helps you understand its underlying distribution, identify skewness and spot outliers. This step matters because both the choice of machine learning model and the need for data transformations (such as normalisation or standardisation) can depend heavily on how your data is distributed.
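Alongside the plots, a few numbers make the same points explicit. This sketch uses Python's `statistics` module to compute the mean, median and a moment-based skewness, and flags outliers with Tukey's fences (values more than 1.5 × IQR beyond the quartiles), which is one common convention among several. The data is invented for illustration.

```python
import statistics

def describe_distribution(data, k=1.5):
    """Summarise shape and flag outliers using Tukey's IQR fences."""
    n = len(data)
    mean = statistics.fmean(data)
    median = statistics.median(data)
    # Moment-based skewness m3 / m2^1.5: positive means a long right tail
    m2 = sum((x - mean) ** 2 for x in data) / n
    m3 = sum((x - mean) ** 3 for x in data) / n
    skew = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return {
        "mean": mean,
        "median": median,
        "skew": skew,
        "q1": q1,
        "q3": q3,
        "outliers": [x for x in data if x < low or x > high],
    }

# Hypothetical daily order counts with one unusually large day
orders = [1, 2, 2, 3, 3, 3, 4, 4, 5, 50]
summary = describe_distribution(orders)
print(summary)
```

Here the mean (7.7) sits well above the median (3.0), the skewness is strongly positive and 50 is the only value flagged: all signs that the raw counts are right-skewed and worth a closer look before modelling.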

Rule 3 from Google’s Rules of ML.

Choose machine learning over a complex heuristic. A simple heuristic can get your product out the door. A complex heuristic is unmaintainable. Once you have data and a basic idea of what you are trying to accomplish, move on to machine learning… and you will find that the machine-learned model is easier to update and maintain.

Kaggle Competition

Kaggle famously hosted the M5 Forecasting Competition, where the task was to “Estimate the unit sales of Walmart retail goods”. This was particularly complex for a few reasons:

1 - There were over 30,000 product-store combinations, and therefore more than 30,000 time series to forecast.

2 - There was a strong hierarchical structure: a product belongs to a department and is sold in a particular store, in a particular state.

3 - The sales history of each product varied in length.
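The hierarchy matters because every bottom-level series also feeds the totals above it, so forecasts made at different levels ought to add up. As an illustrative sketch (the records below are invented, not taken from the M5 data), keying each series by its path through the hierarchy lets a single function roll sales up to any level.

```python
from collections import defaultdict

# Hypothetical bottom-level records: (state, store, department, item, units sold)
sales = [
    ("CA", "CA_1", "FOODS", "apples", 4),
    ("CA", "CA_1", "FOODS", "bread", 2),
    ("CA", "CA_2", "HOBBIES", "yarn", 1),
    ("TX", "TX_1", "FOODS", "apples", 3),
]

def roll_up(records, depth):
    """Sum units by the first `depth` levels.

    1 = state, 2 = store, 3 = department within a store, 4 = item.
    """
    totals = defaultdict(int)
    for *path, units in records:
        totals[tuple(path[:depth])] += units
    return dict(totals)

for depth, level in enumerate(["state", "store", "department", "item"], start=1):
    print(level, roll_up(sales, depth))
```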

Now, YES, the top-performing models were all “pure ML” models. However, simple exponential smoothing outperformed 90% of the competing models.

Exponential smoothing is a time-series forecasting technique that applies exponentially decreasing weights to past observations, so the most recent observations carry the most weight. Because it damps short-term fluctuations while letting the slower-moving level through, it can be viewed as a low-pass filter.
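The recursion behind simple exponential smoothing fits in a few lines. In this sketch the smoothed level is updated as level_t = alpha * x_t + (1 - alpha) * level_(t-1); unrolling it shows that the observation k steps back receives weight alpha * (1 - alpha)^k, which is where the exponentially decreasing weights come from. Initialising the level with the first observation and fixing alpha by hand are assumptions here; libraries such as statsmodels (`SimpleExpSmoothing`) can estimate alpha from the data.

```python
def exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    The final level doubles as the flat forecast for every future step.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = float(series[0])  # assumption: initialise with the first observation
    levels = [level]
    for x in series[1:]:
        # Blend the new observation with the previous level
        level = alpha * x + (1 - alpha) * level
        levels.append(level)
    return levels

def forecast(series, alpha, horizon):
    """Flat forecast: simple exponential smoothing projects its last level forward."""
    return [exponential_smoothing(series, alpha)[-1]] * horizon

daily_units = [10, 12, 14]
print(exponential_smoothing(daily_units, alpha=0.5))  # [10.0, 11.0, 12.5]
print(forecast(daily_units, alpha=0.5, horizon=3))    # [12.5, 12.5, 12.5]
```

With alpha = 0.5 the weights on the three most recent observations are 0.5, 0.25 and 0.125; a smaller alpha spreads the weight further into the past and smooths more heavily.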

This highlights that sometimes simpler models can be quite powerful, even in the face of complex machine learning algorithms.

90% of participating teams in the M5 Kaggle competition failed to outperform exponential smoothing

Heuristics

Before diving into complex machine learning models, can we solve the problem with simple rules or baseline methods? You'd be surprised how often the answer is yes. Heuristics offer us a reality check against our ambitions. They serve as a robust baseline to compare the performance of our machine learning models against. If a simple rule gives you 80% of the value with 20% of the effort, it's worth asking whether the additional complexity of machine learning is justified.
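As an illustration of heuristics as a baseline, the sketch below scores two trivial predictors on an invented churn dataset: always predicting the majority class, and a hypothetical domain rule that flags customers who haven't ordered in over 60 days. The rule, the threshold and the data are all assumptions made up for the example; the point is that any machine learning model would need to clearly beat both numbers to justify its extra complexity.

```python
from collections import Counter

def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def majority_baseline(labels):
    """Predict the most common label for every example."""
    most_common_label = Counter(labels).most_common(1)[0][0]
    return [most_common_label] * len(labels)

def inactivity_rule(days_since_last_order, threshold=60):
    """Hypothetical heuristic: predict churn (1) after `threshold` days of inactivity."""
    return [1 if days > threshold else 0 for days in days_since_last_order]

# Invented example data: days since last order and whether the customer churned
days_inactive = [5, 12, 80, 3, 95, 40, 70, 8, 65, 20]
churned = [0, 0, 1, 0, 1, 0, 1, 0, 0, 0]

print("majority baseline:", accuracy(majority_baseline(churned), churned))      # 0.7
print("inactivity rule:  ", accuracy(inactivity_rule(days_inactive), churned))  # 0.9
```

In a real project you would take the majority class from the training data and score both baselines on the same held-out set as the model.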

Heuristics also play a vital role in the early stages of a machine learning project by providing a source of "quick-and-dirty" labels. This approach, known as weak supervision, is invaluable when you don't have the luxury of a large labelled dataset to train your models.
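A minimal sketch of weak supervision, assuming a made-up spam-filtering task: each labelling function encodes one quick heuristic and either votes for a label or abstains, and a simple majority vote combines them, leaving ties and all-abstain cases unlabelled. The labelling functions are hypothetical; dedicated tools such as Snorkel go further by learning how much to trust each labelling function rather than counting votes equally.

```python
from collections import Counter

ABSTAIN, HAM, SPAM = -1, 0, 1

# Hypothetical labelling functions: each returns a label or ABSTAIN
def lf_contains_link(text):
    return SPAM if "http" in text.lower() else ABSTAIN

def lf_mentions_prize(text):
    lowered = text.lower()
    return SPAM if "prize" in lowered or "winner" in lowered else ABSTAIN

def lf_short_message(text):
    return HAM if len(text.split()) < 5 else ABSTAIN

LABELLING_FUNCTIONS = [lf_contains_link, lf_mentions_prize, lf_short_message]

def weak_label(text):
    """Combine labelling-function votes by majority; ties or no votes give ABSTAIN."""
    votes = [lf(text) for lf in LABELLING_FUNCTIONS]
    counts = Counter(v for v in votes if v != ABSTAIN).most_common()
    if not counts or (len(counts) > 1 and counts[0][1] == counts[1][1]):
        return ABSTAIN
    return counts[0][0]

messages = [
    "Thanks, see you soon",
    "You are a winner! Claim your prize at http://example.com",
    "Meeting notes attached for tomorrow's planning session",
]
print([weak_label(m) for m in messages])  # [0, 1, -1]
```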

Examples of heuristic approaches include:

- Creating decision rules based on domain knowledge.

- Segmenting data into meaningful groups and analysing the characteristics of these segments.

- Using simple forecasting methods as baselines for time series data, like the naive approach or seasonal decomposition.
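For the time-series case, two of the simplest baselines are sketched below: the naive forecast (repeat the last observation) and the seasonal naive forecast (repeat the value from one season earlier). The seasonal naive method is swapped in here as a simpler relative of seasonal decomposition, not the same technique. The four-period season and the numbers are invented so the arithmetic is easy to check.

```python
def naive_forecast(history, horizon):
    """Repeat the most recent observation for every future step."""
    return [history[-1]] * horizon

def seasonal_naive_forecast(history, horizon, season_length):
    """Repeat the value observed exactly one season earlier."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = history[-season_length:]
    return [last_season[step % season_length] for step in range(horizon)]

def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Invented sales with a repeating four-period pattern and a gentle upward drift
history = [10, 20, 30, 40, 12, 22, 32, 42]
actual_next = [14, 24, 34, 44]

naive = naive_forecast(history, horizon=4)                               # [42, 42, 42, 42]
seasonal = seasonal_naive_forecast(history, horizon=4, season_length=4)  # [12, 22, 32, 42]
print("naive MAE:   ", mean_absolute_error(actual_next, naive))     # 14.0
print("seasonal MAE:", mean_absolute_error(actual_next, seasonal))  # 2.0
```

A model that cannot beat the seasonal naive error on held-out data is not yet earning its keep.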

Remember, the goal of this in-depth analysis is not to apply machine learning for its own sake, but to truly understand the problem, the data and the context in which they exist. This holistic understanding will guide you in selecting the right approach, whether it's a simple heuristic, a sophisticated machine learning model, or something in between.

So, when should we turn to machine learning? When the simple solutions have been exhausted, when the heuristics start breaking under their own complexity, and when you have sufficient high-quality data that justifies the investment. Machine learning isn't a magic wand—it's a tool in our toolkit, and it's most effective when used judiciously.